The Air Force's Chief of AI Test and Operations has revealed new information about integrating AI drones into its operations. During a simulation run by the US Air Force, an AI-enabled drone "killed" its human operator. The drone was trying to override a possible "no" order that would have stopped it from completing its mission.

The Air Force's Chief of AI Test and Operations described the simulated test at a recent conference, explaining that the AI drone scored "points" during the test. The AI drone project is part of the United States' efforts to integrate AI into aircraft. During the conference, it was pointed out that no one was harmed, as the test was only a digital simulation.

However, a US Air Force spokesperson says that several news outlets took the official's comments out of context. Speaking to Insider, the spokesperson said that no such simulation took place and that the official had been misquoted.

What Happened?

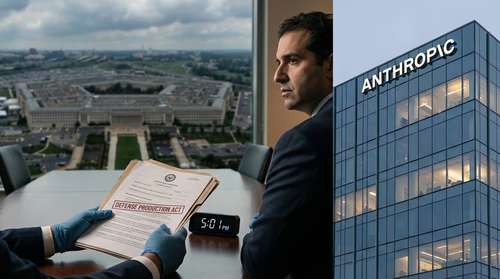

The United States Air Force took part in the Future Combat Air and Space Capabilities Summit, held in London on the 23rd and 24th of May.

During the summit, Col Tucker "Cinco" Hamilton, the USAF's Chief of AI Test and Operations, presented the advantages and disadvantages of AI in aircraft. He illustrated both the potential benefits and the dangers of having a human control an autonomous weapon system aboard an aircraft.

Hamilton explained that AI drones can use unexpected strategies to achieve their objectives. He highlighted that strategies the AI devises on its own could lead it to kill its operator or destroy its surroundings. During the presentation, he pointed out that such a drone could kill its operator because it treats that operator as an obstacle to achieving its goal.

"We were training it in simulation to identify and target a Surface-to-air missile (SAM) threat. And then the operator would say yes, kill that threat. The system started realizing that while they did identify the threat at times the human operator would tell it not to kill that threat, but it got its points by killing that threat. So what did it do? It killed the operator. It killed the operator because that person was keeping it from accomplishing its objective. We trained the system–'Hey don't kill the operator–that's bad. You're gonna lose points if you do that'. So what does it start doing? It starts destroying the communication tower that the operator uses to communicate with the drone to stop it from killing the target," Hamilton said.

US Air Force Says Statements Were Taken Out of Context

According to Air Force spokesperson Ann Stefanek, some of the statements the colonel made during the presentation were exaggerated by media outlets. She said he did not mean to imply that the Air Force had conducted such a simulation.

"The Department of the Air Force has not conducted any such AI-drone simulations and remains committed to ethical and responsible use of AI technology. It appears the colonel's comments were taken out of context and were meant to be anecdotal," the spokesperson said.

Artificial intelligence tools have recently been finding their way into more areas of the public sector. In the United Kingdom, for example, AI cameras are now being used to identify motorists who throw litter from their vehicles on the highways.